Navigating the Future of Software Development with AI

In the evolving landscape of software development, the adoption of Artificial Intelligence (AI) and Machine Learning (ML) has introduced both remarkable advancements and critical challenges. As organizations increasingly rely on these technologies, a surging wave of targeted cyber threats against developers and their infrastructure underscores the necessity for robust defense strategies. This article explores the implications of AI within software development, focusing on how businesses can navigate regulatory, quality, and security challenges to harness its full potential while mitigating inherent risks.

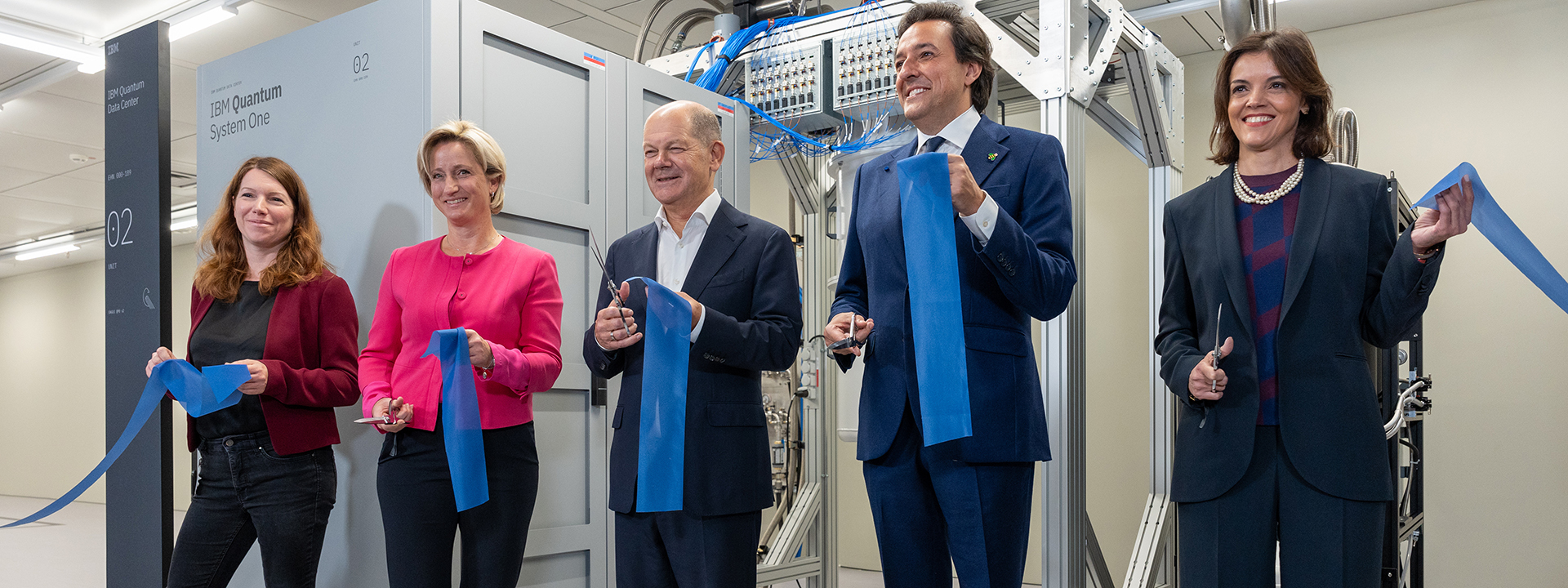

The intersection of AI and software development presents both opportunities and threats.

The Regulatory Landscape

Although the EU AI Act is becoming a regulatory touchstone, it is only one part of a much larger regulatory terrain. It sits alongside other regulations such as the EU GDPR and the Cyber Resilience Act, so the landscape demands a broader perspective. Organizations should not treat the EU framework as the ultimate guide; they should instead adopt a nuanced, risk-based strategy that accounts for diverse global regulations. Many experts argue that the EU AI Act, while addressing some risks, is overly stringent in certain respects, specifically its broad prohibitions on facial recognition, yet potentially lax in others, particularly the governance of generative AI tools and deepfake technologies.

Organizations modernizing their infrastructure must do so within a treacherous regulatory landscape. Compliance strategies must be proactive: regulations often take years to finalize and enact, but businesses benefit from anticipating these changes and aligning their operations in advance. As the implementation phase of these regulations approaches between 2025 and 2027, many established firms find themselves saddled with legacy systems that complicate modernization. They are left managing operational disruptions and inflated IT costs while trying to scale efficiently.

Quality in the Age of AI

Achieving consistent quality in software development has never been straightforward, and the integration of AI complicates matters. Developers encounter challenges rooted in the unpredictable nature of statistical models, which form the backbone of countless AI and ML applications. The mantra remains: AI-driven outputs are only as reliable as the data fed into them. In practice, issues such as data drift (production data diverging from the data a model was trained on) and inherent bias can make outputs erratic.

To mitigate these concerns, developers must adopt rigorous data management and organizational practices. Each phase of the development lifecycle must emphasize quality assurance, moving beyond technical procedure into a cultural ethos centered on excellence. Ensuring that data is both intact and representative is critical to getting consistent, predictable outcomes from AI-driven models. Organizations that want reliability must therefore build a culture that prioritizes quality from the ground up.
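One way to make a data drift check concrete is to compare the distribution of a feature in training data against the same feature in recent production data. The sketch below is a minimal illustration, not a prescribed method: it computes the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical distributions) using only the standard library. The function names and the 0.2 threshold are assumptions chosen for illustration; a real pipeline would tune the threshold per feature.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the empirical CDFs of two numeric samples."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # The gap can only change at observed values, so checking those is enough.
    for x in set(ref) | set(cur):
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def has_drifted(reference, current, threshold=0.2):
    """Flag drift when the KS statistic exceeds a threshold.

    The default threshold is an illustrative assumption, not a standard value.
    """
    return ks_statistic(reference, current) > threshold
```

A check like this could run on a schedule against each model input, with a flagged feature prompting a review of the data source or a retraining decision.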

Emphasizing Security

As AI technology becomes more prevalent, it also opens new vulnerabilities for bad actors to exploit. The growing popularity of languages like Python for AI development illustrates this duality. Python’s accessible syntax and extensive libraries enable rapid AI development, but they also leave significant security holes if not managed correctly. Vulnerable ML models pose real threats, and organizations must prepare to meet the stringent security standards set by new regulations like the EU AI Act.

The security gaps are alarming: research highlights that a single leaked credential can compromise major repositories. For instance, a GitHub token that falls into malicious hands could grant unfettered access to resources belonging to the Python Package Index (PyPI) and the Python Software Foundation (PSF). The ramifications of such a breach could extend to critical systems across the financial, governmental, and eCommerce sectors, which makes stronger security across AI supply chains a necessity.
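A common first line of defense against leaked credentials is to scan source files and build artifacts for token-shaped strings before they are published. The sketch below is a rough illustration of that idea, not a complete secret scanner. It assumes GitHub tokens use the `ghp_`, `gho_`, `ghu_`, `ghs_`, or `ghr_` prefixes followed by at least 36 alphanumeric characters. The function name is hypothetical, and the pattern will miss other credential formats.

```python
import re

# Assumed shape of GitHub tokens: a known prefix plus 36 or more alphanumerics.
TOKEN_PATTERN = re.compile(r"\bgh[pousr]_[A-Za-z0-9]{36,}\b")


def find_token_like_strings(text):
    """Return (line_number, redacted_prefix) for each token-shaped match."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in TOKEN_PATTERN.finditer(line):
            # Report only the first characters so the scan output
            # does not itself leak the credential.
            findings.append((line_number, match.group(0)[:8] + "..."))
    return findings


if __name__ == "__main__":
    # Build a fake token at runtime so this file contains no token-shaped literal.
    sample = "user = 'dev'\ntoken = '" + "ghp_" + "A" * 36 + "'\n"
    for line_number, redacted in find_token_like_strings(sample):
        print(f"line {line_number}: possible token {redacted}")
```

Running a check like this in a pre-commit hook or CI step catches obvious leaks early, though any token that was ever exposed should still be revoked and rotated.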

The Complexity of AI and Future Directions

As AI and software development continue to intertwine, the complexities and risks multiply. Organizations ready to weather the storm will take a proactive approach across regulation, quality assurance, and security. This foresight not only fortifies defenses against emerging threats but is also becoming a basic requirement for brands that want to flourish in an interconnected digital economy.

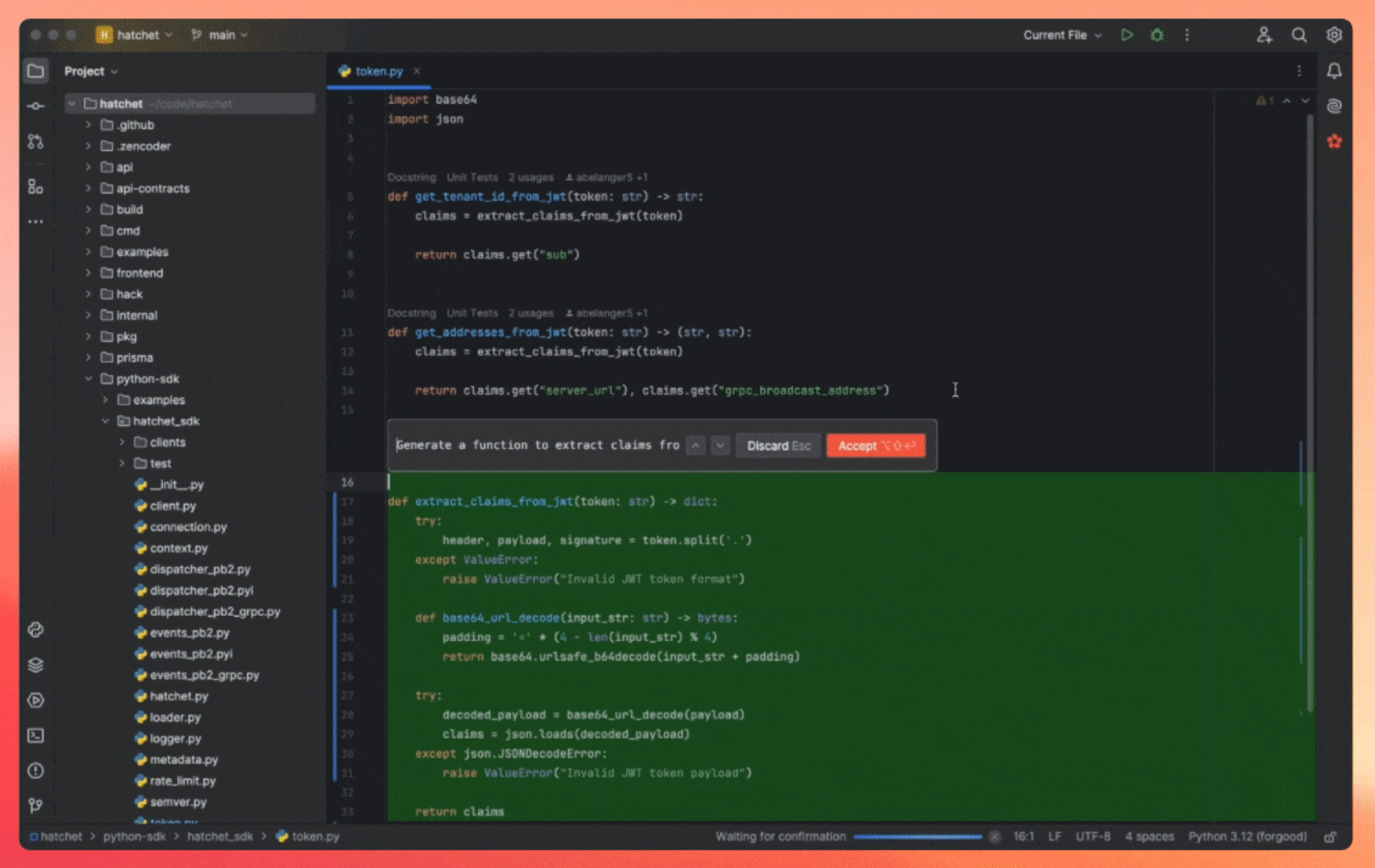

To illustrate solutions within this evolving domain, consider the launch of Zencoder, an AI startup that aims to redefine the software development process through advanced coding agents. Founded by Andrew Filev, Zencoder offers a suite of generative AI agents that promises to streamline coding tasks such as code generation, repair, documentation, and optimization. By integrating AI agents into development workflows, the platform is intended to help developers work faster and produce higher-quality applications.

“The use of the original coding assistant by a lot of developers is compared to StackOverflow on steroids,” said Filev, describing how earlier assistants were used and why intelligent agents need to go beyond mere suggestions. Zencoder’s agents are not just tools; they offer multistep coding capabilities along with self-repair functionality that feeds back into the development cycle, further sharpening code generation.

Zencoder’s advanced tools are setting new benchmarks in development efficiency.

Conclusion

The time for decisive action is upon us. Embracing frameworks akin to the EU AI Act will become indispensable for companies aiming not just to survive but to thrive. In an era where software development is increasingly synonymous with AI, building competencies around regulatory adherence, quality, and fortified security is not only about compliance; it is about strategic advantage. As these evolving demands reshape the landscape, the industry finds itself at a crucial juncture, balancing innovation with the pressing need for responsibility and integrity.

In short, businesses that recognize the intrinsic connection between successful AI integration and responsible governance stand to shape the future of software development, positioned not merely as participants but as leaders within a rapidly transforming industry.
