The One Billion Row Challenge: How Does Python Stack Up?

The question of how fast a programming language can process and aggregate 1 billion rows of data has been gaining traction lately. Python, not being the most performant language out there, naturally doesn’t come to mind as the top contender for this challenge. However, with the rise of big data and the increasing need for efficient data processing, it’s essential to explore Python’s capabilities in this area.

Python’s data processing capabilities put to the test

Python’s data processing capabilities put to the test

The one billion row challenge is exploding in popularity, and it’s crucial to examine how well Python stacks up against other programming languages. Can Python keep up with the demands of big data, or will it falter under the pressure?

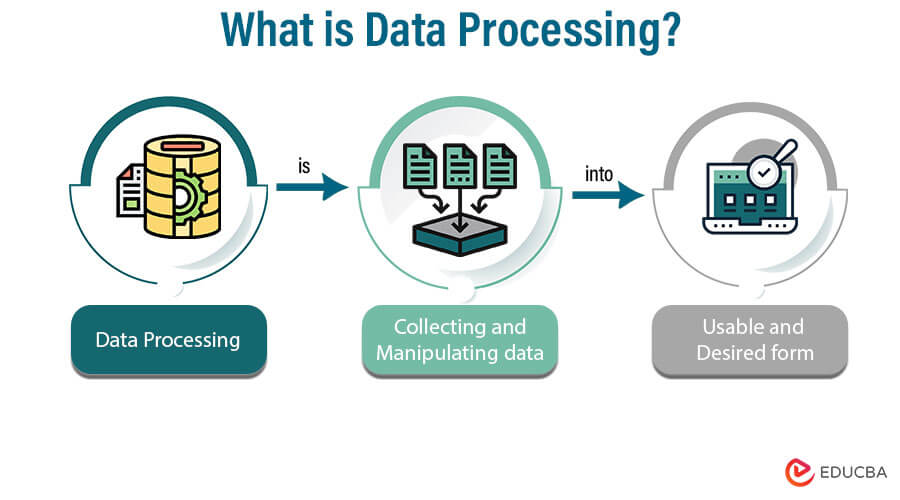

The Challenge of Processing Large Datasets

Processing large datasets is a daunting task, even for the most efficient programming languages. The sheer scale of the data can be overwhelming, and even the slightest inefficiency can lead to significant delays. Python, with its dynamic typing and interpreted nature, is often overlooked in favor of more performant languages like C++ or Java. However, Python’s versatility and extensive libraries make it an attractive choice for data processing.

The complexity of processing large datasets

The complexity of processing large datasets

Python’s Performance in Data Processing

So, how well does Python perform in the one billion row challenge? The answer is not straightforward. Python’s performance is heavily dependent on the specific implementation, the quality of the code, and the hardware it’s running on. However, with the right tools and optimizations, Python can hold its own against more performant languages.

“The question is not whether Python can process one billion rows of data, but how efficiently it can do so.” - Unknown

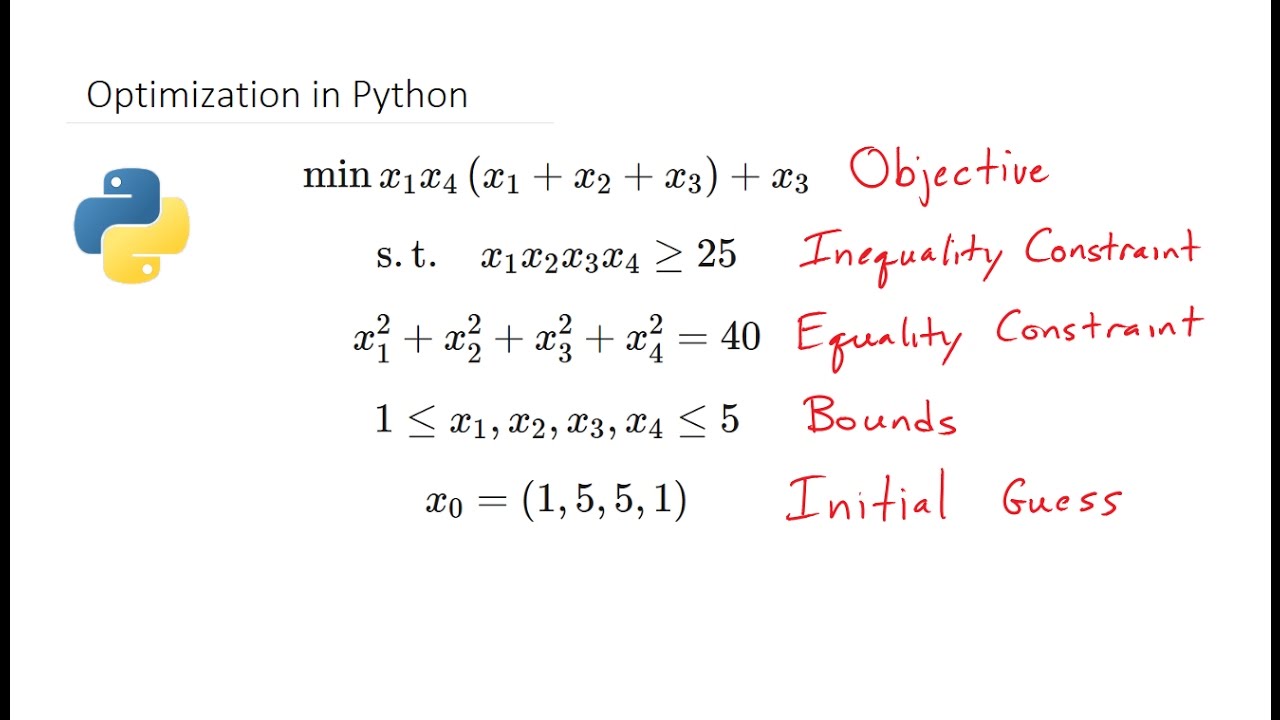

Optimizing Python for Data Processing

To optimize Python for data processing, it’s essential to leverage its strengths and mitigate its weaknesses. This can be achieved through the use of efficient data structures, parallel processing, and clever optimization techniques. By leveraging libraries like NumPy, Pandas, and Dask, Python can process large datasets with remarkable efficiency.

Optimizing Python for data processing

Optimizing Python for data processing

Conclusion

The one billion row challenge is a testament to the growing importance of efficient data processing. While Python may not be the most performant language, it’s certainly capable of holding its own in the world of big data. With the right optimizations and techniques, Python can process large datasets with remarkable efficiency, making it a valuable tool in the world of data science.

The future of data science

The future of data science